Using Deep learning to integrate range partitioning into SAP systems

The principle of partitioning is very simple: It means splitting a large table in smaller parts, called partitions, grouping and separating the rows of the table based on the content of one or more columns. Rows fulfilling the same criteria are placed in the same partition. Partitioning can provide tremendous benefit to a wide variety of applications by improving performance, manageability, and availability.

- By limiting the amount of data to be examined or operated on, and by providing data distribution for parallel execution, partitioning provides multiple performance benefits.

- Partitioning enables you to partition tables and indexes into smaller, more manageable units, providing database administrators with the ability to pursue a divide and conquer approach to data management.

- Partitioned database objects provide partition independence. This characteristic of partition independence can be an important part of a high-availability strategy. For example, if one partition of a partitioned table is unavailable, then all of the other partitions of the table remain online and available. The application can continue to execute queries and transactions against the available partitions for the table, and these database operations can run successfully, provided they do not need to access the unavailable partition.

- Partitioning enables you to partition tables and indexes into smaller, more manageable units, providing database administrators with the ability to pursue a divide and conquer approach to data management.

- Partitioned database objects provide partition independence. This characteristic of partition independence can be an important part of a high-availability strategy. For example, if one partition of a partitioned table is unavailable, then all of the other partitions of the table remain online and available. The application can continue to execute queries and transactions against the available partitions for the table, and these database operations can run successfully, provided they do not need to access the unavailable partition.

Partitioning is thus is a key tool for building multi-terabyte systems or systems with extremely high availability requirements and is standard feature of most database systems.

- IBM DB2 partitioning

- MySQL partitioning

- Oracle partitioning

- SQL Server partitions

- Sybase ASE 15.0 partitioning

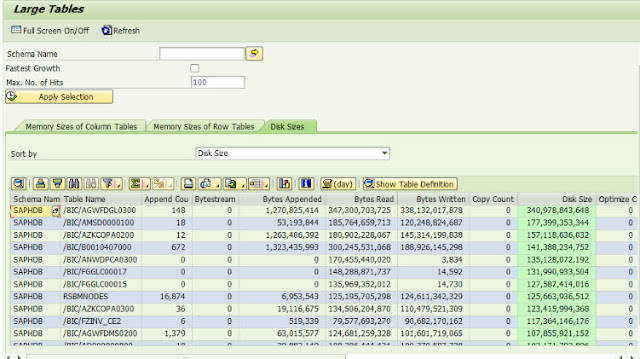

In SAP systems, partitioning has been used in BW (Business Warehouse) systems, since the very first BW systems were released.It is however not used by default in any other SAP business suite including SAP ERP system,SAP CRM,SAP MDM, etc. for mainly historical reasons. The SAP ERP system is based on the architecture of the R/2 system, and at the time the first R/3 releases were shipped; partitioning technology was not available on UNIX based databases and was rarely used on even on host-based systems. The original SAP system was therefore designed on the basis of the database technology available 30 years ago. Let us compare the common database sizes of today with those used 30 years ago. Initially SAP ERP systems contained only basic functionality and it was common for databases to be less than 100GB in size. Since then more and more functionality has been introduced to the R/3 system and database sizes have increased. Today “small" SAP installations commonly have database sizes of around 1TB whereas SAP customers, using special functionalities such as the SAP retail module, SD are running databases of 50-100TB and more.

Such large databases are linked with several challenges:

- Response times to user queries and batch processing must be guaranteed. This becomes more and more difficult because the tables are constantly growing in size.

- Old data must be archived, but archiving can have a negative impact on database performance. Deleting data from the database generates a huge transaction load, affecting normal data processing.

- Archiving very large tables can be a never-ending job. Once archiving is complete, follow-up tasks are needed either to rebuild fragmented indexes and/or to free up space in the tablespaces. These additional tasks occupy maintenance windows for the system, reducing overall availability.

- Processing large table uses up much more time and resources on the database as well as on the application servers.

- Response times to user queries and batch processing must be guaranteed. This becomes more and more difficult because the tables are constantly growing in size.

- Old data must be archived, but archiving can have a negative impact on database performance. Deleting data from the database generates a huge transaction load, affecting normal data processing.

- Archiving very large tables can be a never-ending job. Once archiving is complete, follow-up tasks are needed either to rebuild fragmented indexes and/or to free up space in the tablespaces. These additional tasks occupy maintenance windows for the system, reducing overall availability.

- Processing large table uses up much more time and resources on the database as well as on the application servers.

The real challenge for integrating partitioning into an SAP system is to find suitable partitioning keys. This becomes even more difficult due to

- The very large no of tables in SAP business suite applications in the order of 100K to 125K tables.

- Complexity of the application

- The unavailability of data model

- Not a direct relationship of the business transaction to the backend query

Deep learning can help us here.

It can integrate with the database engine and read all the queries hitting the database. It can then look at which tables were most accessed over a period of time and which fields in the tables. It can then come up with a combination of columns for the partition key. It can then check the skew of data with the set combination to determine if it is a good choice and reiterate the process. Over time the AI system can learn the usage pattern of the application and will be able to predict accurate partition keys.

Once the table is partitioned, another interesting aspect of using AI for maintenance is that the AI engine can intelligently attach and detach table partitions based on usage. It can on the fly detach (roll-out) from a range-partitioned table via the ALTER TABLE ... DETACH PARTITION INTO statement. Afterwards the detached partition appears as a new table with table name. No data movement and consequently no logging are required for this operation. Likewise, an existing table can be attached (roll-in) to a range-partitioned table via ALTER TABLE ... ATTACH PARTITION if it is compatible with the partitioned table.

Due to the flexible assignment of tablespaces to the partitions the large object data of each partition can be placed in a separate tablespace. This does not only allow that a table growth beyond the maximum size limits of a tablespace, it makes it also possible to perform operations like BACKUP and RESTORE on partition-level. The AI engine can also monitor defragmentation or Cluster ratios and perform automatic reorgs just in the necessary partition (the partition must be detached first and attached again after the REORG) thus significantly reducing the outage or batch windows for database maintenance compared to traditional approach.

If you would like to try our system for your database please email at info@sublimeai.com.

Appendix: Creating Range-Partitioned Tables in the ABAP DDIC with DB2.

To convert a non-partitioned table to a range-partitioned table, the conversion process of the ABAP DDIC can be used. To demonstrate this we use the CUSTOMERS table with a slight modification. This able belongs to the ABAP example objects and is available in every ABAP system. To have at least one column of type INTEGER in this table, the field POSTCODE is changed in the ABAP DDIC (SE11) to the predefined DDIC type INT4. This change is again a good example for a conversion. To perform it proceed as follows:

- Call SAP transaction SE11 and edit the table CUSTOMERS

- Change the data type of the column POSTCODE from CHAR(10) to the predefined DDIC type INT4 by choosing the “Predefined Type” pushbutton

- Save and activate the table. A message like “Structure change at field level (convert table CUSTOMERS)” appears

- From the “Utilities” menu, choose “Database Object → Database Utility”. You are redirected to SAP transaction SE14, the database utility

- From the “Extras” menu, choose “Force Conversion” and accept the message box. As a result the conversion is performed.

Comments

Post a Comment