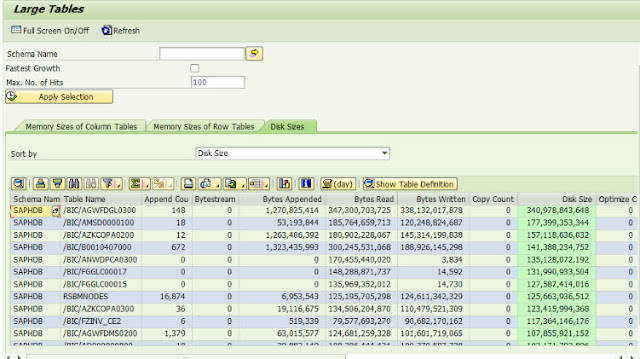

Large SAP tables which are good candidates for partitioning

Not all tables are good candidates for partitioning. The tool we have developed runs multiple checks against large tables to identify the right candidates. Generally, if the answer to the following questions is yes, table partitioning may be a suitable strategy for improving performance.

1. Is the database large enough?
2. Is the table large enough?
3. Do your reports and queries have distinguishable access patterns?
4. Are you experiencing slowness and performance degradation?
5. Is the skew factor for the data distribution within reasonable limits?

Below are some of the large tables that have been candidates for table partitioning among our SAP customers.

Large material master tables MARA Material Master Table MARC Plant Material Data MARO...